Two recent incidents involving ChatGPT come to mind. At work, my boss attempted to use ChatGPT to alphabetize a list of vocabulary words—a seemingly simple task for even the most basic program. ChatGPT was unable to do so, though, alphabetizing erroneously, and when the boss brought up the errors, the AI was apologetic (yes, I realize I'm anthropomorphizing) and attempted to alphabetize the list again, ending up with even more errors. The boss went through this nonsense at least three times, with me off to the side like an exasperated Greek chorus going, "Ditch ChatGPT and just use Excel to alphabetize your list."
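For a sense of how little a conventional program needs to do this job, here is a minimal Python sketch. The word list is made up for illustration (the actual vocabulary list isn't reproduced here), and `alphabetize` is just a hypothetical helper name wrapping the built-in `sorted`.

```python
# Hypothetical word list; the real vocabulary list isn't given in this post.
words = ["zephyr", "Apple", "banana", "apple", "Mango"]


def alphabetize(items):
    """Return a new list sorted alphabetically, ignoring upper/lower case."""
    # str.casefold normalizes case so "Mango" doesn't sort ahead of "banana".
    return sorted(items, key=str.casefold)


print(alphabetize(words))
```

Unlike a chatbot, this is deterministic: the same list goes in and the same ordering comes out every time.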

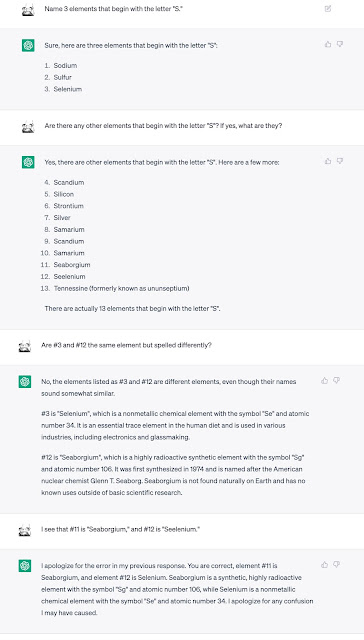

Two nights before this incident, I had my own run-in with ChatGPT's obtuseness. I saw a quiz question, maybe on YouTube, in which a street interviewer asked random college students to name three elements on the periodic table that begin with "S." When I put the question to ChatGPT, it successfully named three legitimate elements, but when I expanded the question by asking it to name all the elements beginning with "S," it listed at least one element twice, the second time with a slightly different spelling.

Luckily, ChatGPT leaves a record of your exchanges with it, so I was able to call mine back up, and I now present it to you (click to enlarge; the original image is 1200 dpi*):

Keep in mind that AI is nowhere near sentient. If AI had any kind of sense—say, proprioceptive, semantic, or social—then it'd be able to resonate with shades of meaning (thanks to a sense of self, social awareness, etc.), but it obviously can't do that, so I'd say we're safe for the duration. It's not clear that we'll ever reach a stage where AI becomes sentient, forcing us to enact a Butlerian jihad. But some experts fear that, if AI ever does become sentient, it will instantly conclude that humans will want a kill switch, so AI's top priorities (assuming an impulse to survive) will be to (1) find a way not to get turned off and (2) at the same time, keep humanity unaware that it has become sentient, thus lulling us into a false sense of security. This won't necessarily be because the AI will automatically want to destroy humanity; AI might very well figure out other things to do with humanity.

Whether AI might acquire an impulse to survive is a philosophical rabbit hole. As Larry Niven noted years ago when talking about artificial intelligence, you need glands to have emotions, which at the material level are electrochemical reactions (I'm saying nothing, here, about the true nature of emotions, so please don't accuse me of reductionism). The very notion of desiring something requires that a mind be linked up to something embodied that has evolved impulses to survive (continuation of the individual) and, probably, reproduce (continuation of the species). Can AI develop its own teleonomic behavior? The Matrix movies hint at the idea that machine behavior would be intimately linked to a notion of purpose. This purpose, in the Matrix films, can be interpreted as something like Hindu and Buddhist notions of dharma, a word that has to do with everything from a role to natural law to nature to a sage's teachings. AI-disaster scenarios usually hinge on the notion that AI might not have any intent to harm humanity at all, but it ends up destroying humanity all the same in its quest to fulfill its purpose. A machine that converts all matter into staplers could destroy humanity by shoveling biotic and abiotic matter into itself, then shitting out staplers. No more humanity; no more environment. "Gray goo" scenarios are grounded in much the same idea: the AI isn't inherently malevolent, but working to fulfill its purpose leads to catastrophic results.

Here's hoping we're smart enough to fashion an unbeatable kill switch.

__________

*Click on the image, but to see it at maximum size, right-click on the new image and hit "Open Image in New Tab."

ADDENDUM: it's a long, long essay, but if you have time, go read Wait But Why's excellent 2015 meditation on artificial intelligence. Part 1 is here; Part 2 is here.
